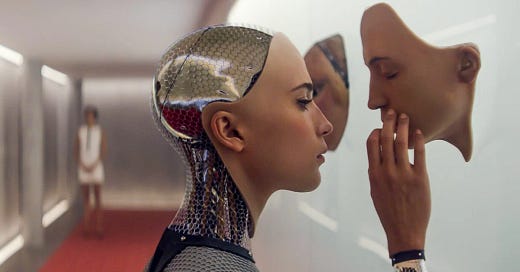

Is Artificial Intelligence making me stupid?

A study by Microsoft and Carnegie Mellon supports my personal experiences of cognitive degradation.

Good morning, and welcome to the first email in a long time.

I don’t know how regular these emails will be; I’ll write when I have something to say. No rules. But since the world is upside down, there are quite a few topics to cover.

I’ll start with a personal observation: AI is making me stupid.

And as someone who is paid to think, I know this is not good.

Let’s start with some background:

Understanding and implementing emerging technology has been a large part of my job as a strategist for 15 years. AI is just the next technology to be embraced. I approached it as I have everything else: developing a theoretical understanding while testing it in as many settings as possible.

I use lots of different AI tools in my daily life. I write code with Cursor and texts with Claude; I search with Exa and Perplexity and analyse data with ChatGPT.

Still, I’ve felt for quite some time that using AI significantly impairs my ability to think challenging, independent thoughts.

I read the summary instead of the full article.

I ask for potential angles and outlines when writing a text.

I get data patterns on a platter instead of finding them myself.

And little by little, my brain degrades.

Self-reported cognitive degradation related to AI is not unique to me. Other knowledge workers experience it, too, according to a study from Microsoft and Carnegie Mellon: The Impact of Generative AI on Critical Thinking: Self-Reported Reductions in Cognitive Effort and Confidence Effects From a Survey of Knowledge Workers (Lee et al., 2025).

The researchers surveyed 319 knowledge workers about the critical thinking effort they applied when using generative AI, collecting 936 first-hand examples of GenAI use in work tasks. This is one of the first studies on this topic, but it is unlikely to be the last.

You should read the paper (to keep your brain from rotting), but here are a few quotes:

“Confidence in AI is associated with reduced critical thinking effort, while self-confidence is associated with increased critical thinking effort.”

In other words:

We think less critically when we believe AI is better than us at a task.

When we are confident that we are better than AI, we think more critically.

However, as AI abilities continue to improve, the range of tasks where we are confident we outperform AI will shrink, making us think less critically.

Also:

“… while GenAI can improve worker efficiency, it can inhibit critical engagement with work and can potentially lead to long-term overreliance on the tool and diminished skill for independent problem-solving.”

This means that the researchers see a risk that our problem-solving abilities will deteriorate over time if we rely on Generative AI tools to do that work for us.

I think the most interesting topic is the long-term risk of collective cognitive degradation. Are we at risk of becoming increasingly stupid as a society?

The researchers do not expand on this risk, presumably because doing so would be speculative. However, they seem to think we should consider the risk of reduced critical thinking when designing Generative AI tools. They write:

“Knowledge workers face new challenges in critical thinking as they incorporate GenAI into their knowledge workflows. To that end, our work suggests that GenAI tools need to be designed to support knowledge workers’ critical thinking by addressing their awareness, motivation, and ability barriers.”

This is interesting. As a digital strategist, I understand that a colossal AI wave will impact all aspects of society. And in many areas, such as cancer detection, AI complements human abilities rather than replacing them. That is great.

However, we also see a push to increase the use of Generative AI among the general population. Widespread use of digital technology has generally been a good thing in modern societies. But is that the best long-term path forward?

Many argue that using generative AI is no different from using a calculator instead of pen and paper, or Google instead of an encyclopedia. Throughout history, technological tools have transformed our thinking without necessarily degrading our cognitive abilities. This suggests that they change the nature of the skills we prioritise rather than diminish our intellectual capacity.

Still, I am probably much worse at solving mathematical equations today than I would be without the calculator. Because of the calculator, solving equations is not something I practice regularly.

We should probably reflect on the skills we hand over to machines, even more so as machines rapidly overtake average human performance on a significant share of tasks.

I’m not saying this because I worry about jobs. I’m saying this because I worry about human abilities.

Humans have thrived in this world due to our cognitive abilities. But our brains are lazy, so outsourcing a lot of thinking might be tempting. And what happens if we don’t practice thinking?

Outsourcing tasks to AI makes perfect sense in a world obsessed with efficiency. There's no doubt that using AI increases my productivity. How could it not, when I'm essentially delegating work to a tireless machine that often matches my own output quality?

But efficiency isn't everything. As someone who thinks for a living, I'm increasingly concerned about what we lose when prioritising speed over cognitive development. The parallel with social media is telling: despite clear evidence that excessive scrolling damages our ability to form meaningful relationships, we spend over two hours daily on these platforms.

This pattern of choosing convenience over capability should worry us. Will parents limit their children's AI use to protect their developing minds? Will specific professional or educational communities start rejecting AI tools to preserve their cognitive edge? These aren't theoretical questions – they're choices we must make soon.

The hard truth is that most of us are terrible at making choices that benefit us in the long run, even when we understand the consequences. Our brains are fundamentally lazy, always seeking the path of least resistance. And that's what makes AI so seductive – and so precarious. It's not just another productivity tool; it might be the ultimate enabler of our cognitive laziness.

I don't have the answers.

But I know that every time I let AI do my thinking, I'm choosing the kind of thinker I want to be. Maybe it's time we all started making that choice more consciously.

Great article (email), Anna.

I haven't read a full article (without an AI summary) in a long time.

Looking forward to your next "Critical Thoughts".

Great debut post, Anna. Welcome! Let me start by saying that I'm most concerned about how AI will affect young(er) people, whose brains and thinking skills are not yet fully formed, using it to cut corners. As for the rest of us, I think the future belongs to those who can get the best out of AI while protecting their mental faculties. I think it is possible, but we also need to come out of our silos and compare notes more. We need to band together more as humans, to preserve what might be lost if we don't.